
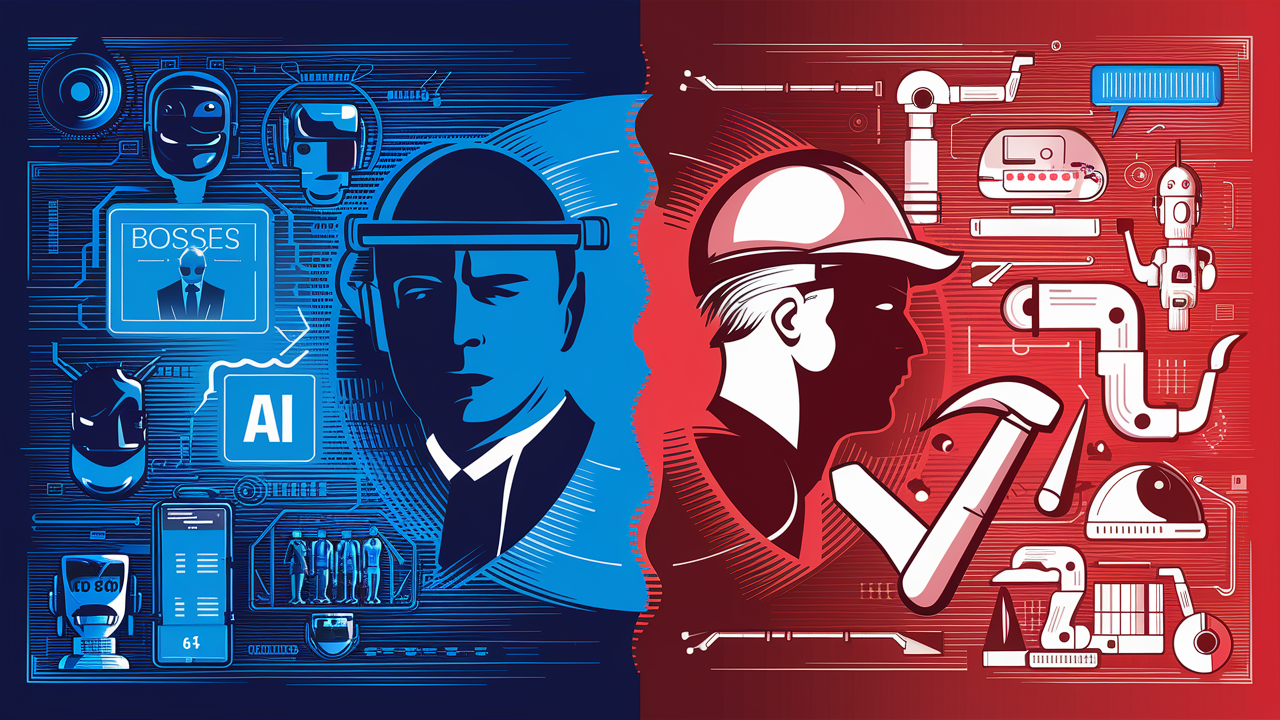

“Copyright traps” could tell writers if an AI has scraped their work

The technique has been used throughout history, but now it could be a tool in one of the biggest fights in artificial intelligence.

A number of publishers and writers are in the middle of litigation against tech companies, claiming their intellectual property has been scraped into AI training data sets without their permission. Now they have a new way to prove it: “copyright traps,” developed by a team at Imperial College London. These are pieces of hidden text that allow writers and publishers to subtly mark their work so they can later detect whether it has been used to train AI models.

Smaller models, however, which are gaining popularity and can run on mobile devices, memorize less of their training data than large ones. They are therefore less susceptible to membership inference attacks (techniques for testing whether a particular piece of data was part of a model's training set), which makes it harder to determine whether they were trained on a particular copyrighted document, says Gautam Kamath, an assistant professor of computer science at the University of Waterloo, who was not part of the research.
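The article does not spell out how detection works. As a rough, hypothetical illustration of the membership-inference idea, one common signal is perplexity: a model tends to be less "surprised" by (assign lower perplexity to) text it has memorized. The sketch below assumes you can obtain per-token natural-log probabilities from the model under test, and that you also hold "control" trap sequences that were never published. The function names and the z-score rule are illustrative assumptions, not the Imperial College team's method.

```python
import math
from statistics import mean, stdev


def perplexity(token_logprobs: list[float]) -> float:
    """Perplexity = exp(-average natural-log probability per token)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-mean(token_logprobs))


def trap_z_score(trap_logprobs: list[float],
                 control_logprobs: list[list[float]]) -> float:
    """Hypothetical test: how many standard deviations the published trap's
    perplexity sits BELOW that of never-published control sequences.
    A large positive value suggests the model finds the trap unusually
    familiar, i.e. it may have been trained on it."""
    if len(control_logprobs) < 2:
        raise ValueError("need at least two control sequences")
    control_ppl = [perplexity(lp) for lp in control_logprobs]
    mu, sigma = mean(control_ppl), stdev(control_ppl)
    if sigma == 0:
        raise ValueError("control perplexities have zero spread")
    return (mu - perplexity(trap_logprobs)) / sigma


if __name__ == "__main__":
    # Made-up log-probabilities, for illustration only.
    trap = [-0.5, -0.4, -0.6]
    controls = [[-2.0, -2.1, -1.9], [-1.8, -1.7, -1.9], [-2.2, -2.3, -2.1]]
    print(f"z = {trap_z_score(trap, controls):.2f}")
```

Comparing against controls, rather than using a fixed perplexity cutoff, is one way to account for the fact that some sequences are simply easier for any model to predict. As Kamath notes, a model that memorizes little would show only a small gap between trap and controls, so a test like this would have less power.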
