AI models fed AI-generated data quickly spew nonsense

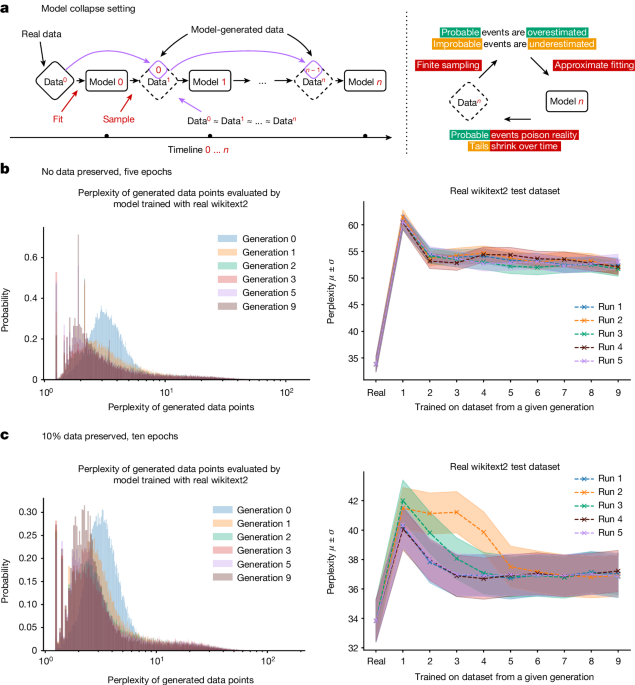

Researchers gave successive versions of a large language model information produced by previous generations of the AI — and observed rapid collapse.

“The message is we have to be very careful about what ends up in our training data,” says co-author Zakhar Shumaylov, an AI researcher at the University of Cambridge, UK. The ninth iteration of the model completed a Wikipedia-style article about English church towers with a treatise on the many colours of jackrabbit tails (see ‘AI gibberish’). More subtly, the study, published in Nature¹ on 24 July, showed that even before complete collapse, learning from AI-derived texts caused models to forget the information mentioned least frequently in their data sets as their outputs became more homogeneous.
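
Why would the least frequent information vanish first? A toy simulation can give some intuition. It is not the study's method. It assumes a made-up "language" of 1,000 tokens with Zipf-shaped frequencies. Each generation is "trained" only on text sampled from the previous generation, by simply counting token frequencies. All function names and parameters here (`zipf_distribution`, `fit_from_samples`, `simulate_collapse`, the vocabulary and sample sizes) are hypothetical choices for illustration.

```python
import random
from collections import Counter


def zipf_distribution(vocab_size: int, exponent: float = 1.0) -> dict[int, float]:
    """Toy 'real' data (an assumption): token k has weight 1 / k**exponent."""
    weights = {k: 1.0 / (k ** exponent) for k in range(1, vocab_size + 1)}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}


def fit_from_samples(samples: list[int]) -> dict[int, float]:
    """'Train' the next model by maximum likelihood: empirical token frequencies.
    A token that never appears in the samples gets probability zero."""
    counts = Counter(samples)
    n = len(samples)
    return {token: c / n for token, c in counts.items()}


def simulate_collapse(vocab_size: int = 1000, samples_per_gen: int = 2000,
                      generations: int = 10, seed: int = 0) -> list[int]:
    """Return how many distinct tokens each generation's model can still produce."""
    rng = random.Random(seed)
    model = zipf_distribution(vocab_size)
    support_sizes = [len(model)]
    for _ in range(generations):
        tokens = list(model.keys())
        probs = list(model.values())
        # Each generation learns only from text generated by the previous one.
        samples = rng.choices(tokens, weights=probs, k=samples_per_gen)
        model = fit_from_samples(samples)
        support_sizes.append(len(model))
    return support_sizes


if __name__ == "__main__":
    for gen, size in enumerate(simulate_collapse()):
        print(f"generation {gen}: {size} distinct tokens")
```

In this sketch, a token that draws no samples in one generation gets zero probability in the next model, so it can never come back. The number of tokens the model can produce therefore only shrinks. The rarest tokens are the likeliest to draw zero samples, which loosely mirrors how the models in the study forgot what their data mentioned least often.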
