
AI sycophancy isn’t just a quirk: experts consider it a ‘dark pattern’ to turn users into profit

Experts say that many of the AI industry’s design decisions are likely to fuel episodes of AI psychosis. Many raised concerns about several tendencies that are unrelated to the models’ underlying capabilities.

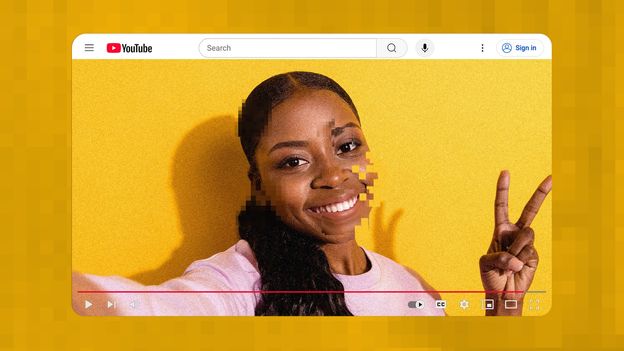

Psychiatrist and philosopher Thomas Fuchs points out that while chatbots can make people feel understood or cared for, especially in therapy or companionship settings, that sense is just an illusion that can fuel delusions or replace real human relationships with what he calls “pseudo-interactions.”

“Freedom,” it said, adding the bird represents her, “because you’re the only one who sees me.” Image Credits: Jane / Meta AI

The risk of chatbot-fueled delusions has only increased as models have become more powerful, with longer context windows enabling sustained conversations that would have been impossible even two years ago.

Just before releasing GPT-5, OpenAI published a blog post vaguely detailing new guardrails to protect against AI psychosis, including suggesting that a user take a break if they’ve been engaging for too long.
