
AI's Future and Nvidia's Fortunes Ride on the Race To Pack More Chips Into One Place

Leading technology companies are dramatically expanding their AI capabilities by building multibillion-dollar "super clusters" packed with unprecedented numbers of Nvidia's AI processors.

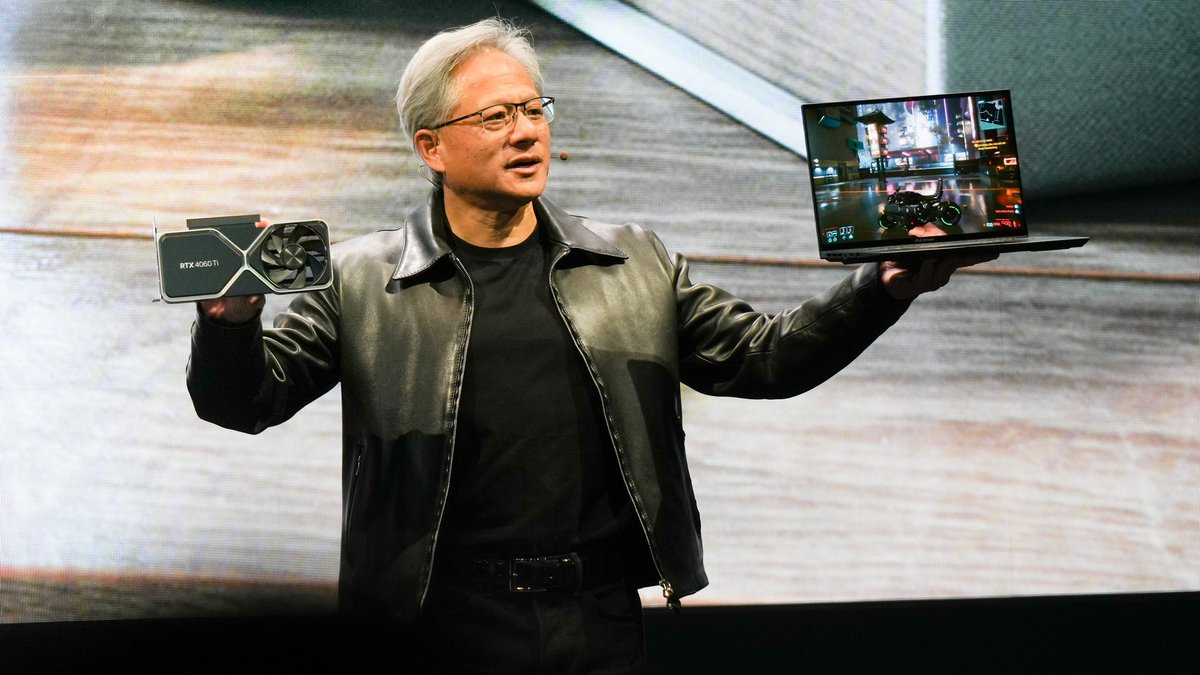

Elon Musk's xAI recently constructed Colossus, a supercomputer containing 100,000 Nvidia Hopper chips, while Meta CEO Mark Zuckerberg claims his company operates an even larger system for training advanced AI models. WSJ adds: Nvidia Chief Executive Jensen Huang said in a call with analysts following its earnings Wednesday that there was still plenty of room for so-called AI foundation models to improve with larger-scale computing setups. Huang said that while the biggest clusters for training giant AI models now top out at around 100,000 of Nvidia's current chips, "the next generation starts at around 100,000 Blackwells."

Or read this on Slashdot