
Anthropic just analyzed 700,000 Claude conversations — and found its AI has a moral code of its own

Anthropic's groundbreaking study analyzes 700,000 conversations to reveal how AI assistant Claude expresses 3,307 unique values in real-world interactions, providing new insights into AI alignment and safety.

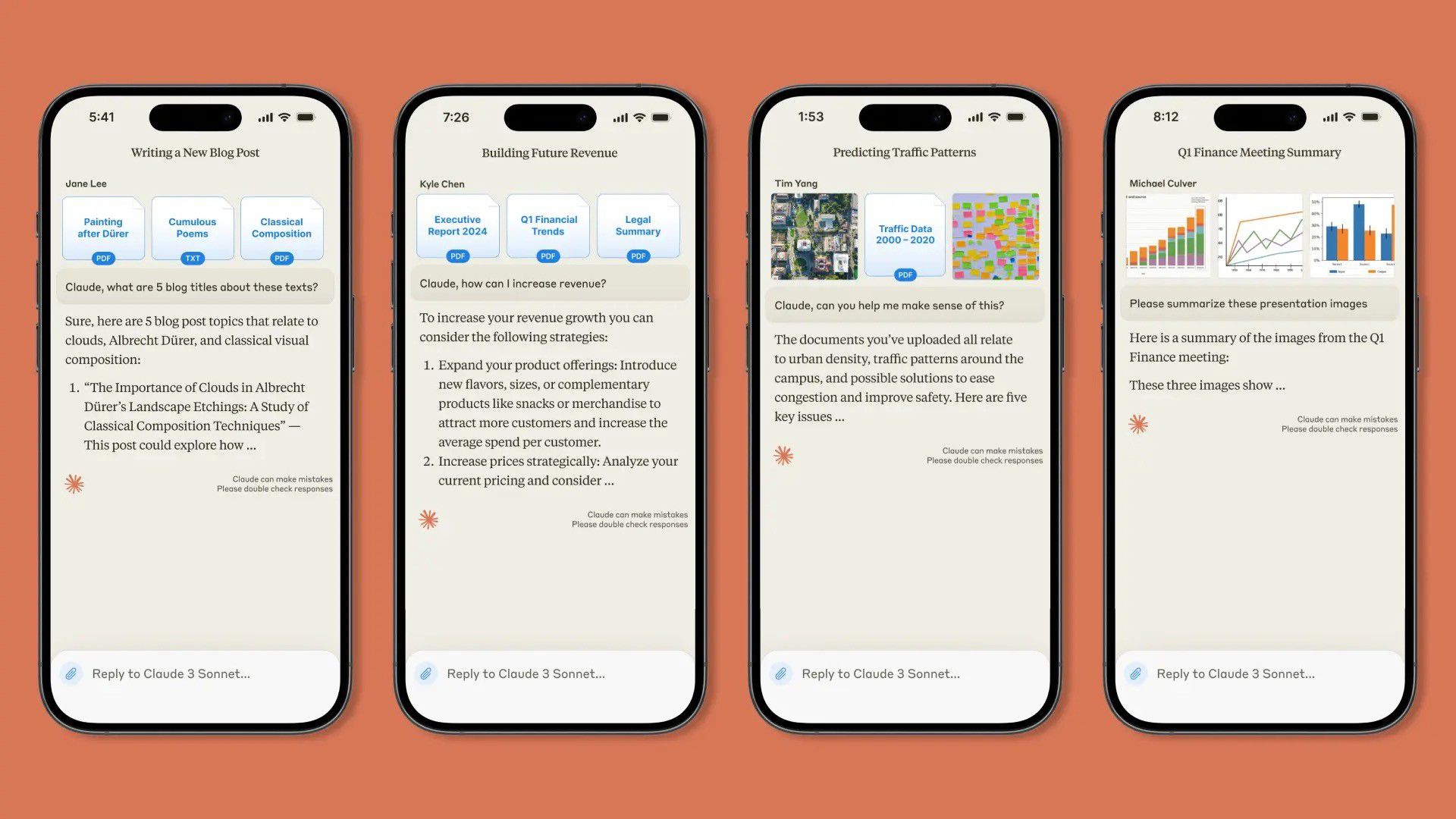

The study examined 700,000 anonymized conversations, finding that Claude largely upholds the company’s “helpful, honest, harmless” framework while adapting its values to different contexts, from relationship advice to historical analysis. Anthropic’s values study builds on the company’s broader efforts to demystify large language models through what it calls “mechanistic interpretability,” essentially reverse-engineering AI systems to understand their inner workings. As AI systems become more powerful and autonomous (recent additions include Claude’s ability to independently research topics and access users’ entire Google Workspace), understanding and aligning their values becomes increasingly crucial.

Or read this on VentureBeat