Anthropic researchers discover the weird AI problem: Why thinking longer makes models dumber

Anthropic research reveals AI models perform worse with extended reasoning time, challenging industry assumptions about test-time compute scaling in enterprise deployments.

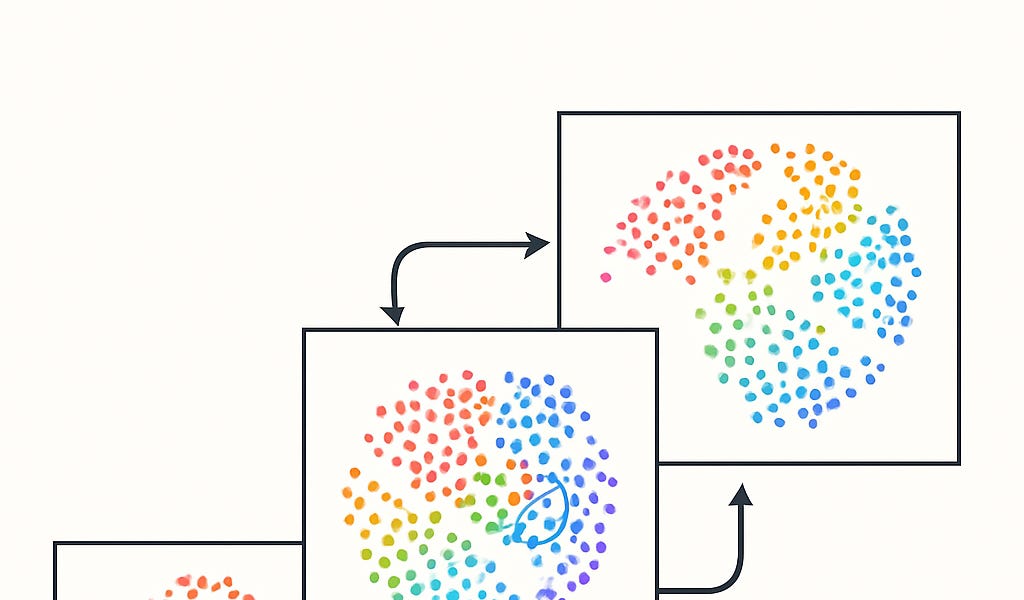

Artificial intelligence models that spend more time “thinking” through problems don’t always perform better, and in some cases they get significantly worse, according to new research from Anthropic that challenges a core assumption driving the AI industry’s latest scaling efforts. The study, led by Anthropic AI safety fellow Aryo Pradipta Gema and other company researchers, identifies what they call “inverse scaling in test-time compute,” where extending the reasoning length of large language models degrades their performance across several types of tasks. In a field where billions are being poured into scaling up reasoning capabilities, Anthropic’s research offers a sobering reminder: sometimes artificial intelligence’s greatest enemy isn’t insufficient processing power but overthinking.

Or read this on Venture Beat