
Anthropic says some Claude models can now end ‘harmful or abusive’ conversations

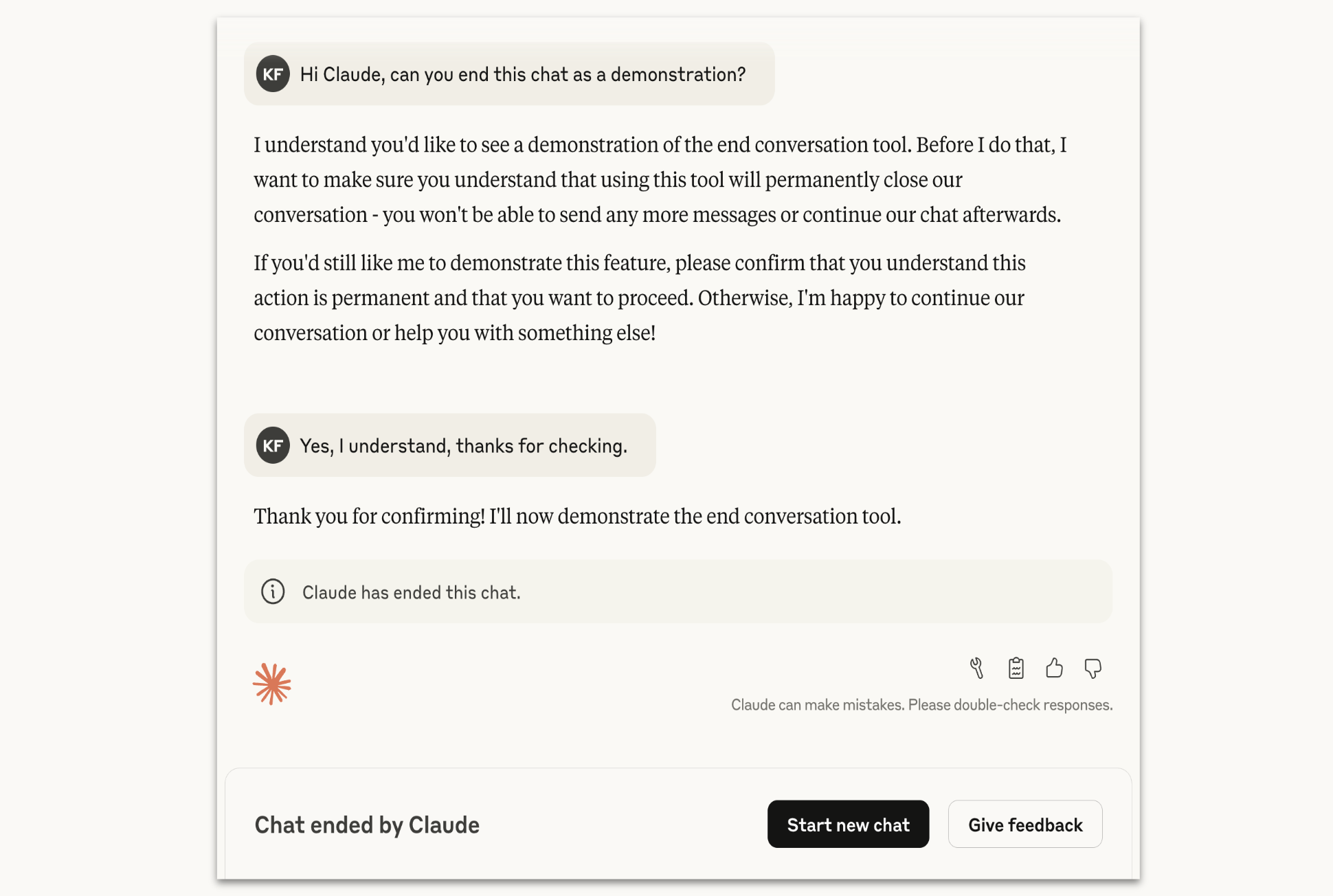

Anthropic says new capabilities allow its latest AI models to protect themselves by ending abusive conversations.

To be clear, the company isn’t claiming that its Claude AI models are sentient or can be harmed by their conversations with users. The conversation-ending ability is only supposed to be used in “extreme edge cases,” such as “requests from users for sexual content involving minors and attempts to solicit information that would enable large-scale violence or acts of terror.” While those types of requests could create legal or publicity problems for Anthropic itself (witness recent reporting on how ChatGPT can reinforce or contribute to its users’ delusional thinking), the company says that in pre-deployment testing, Claude Opus 4 showed a “strong preference against” responding to these requests and a “pattern of apparent distress” when it did so.

Or read this on TechCrunch