Cosine Similarity Isn't the Silver Bullet We Thought It Was

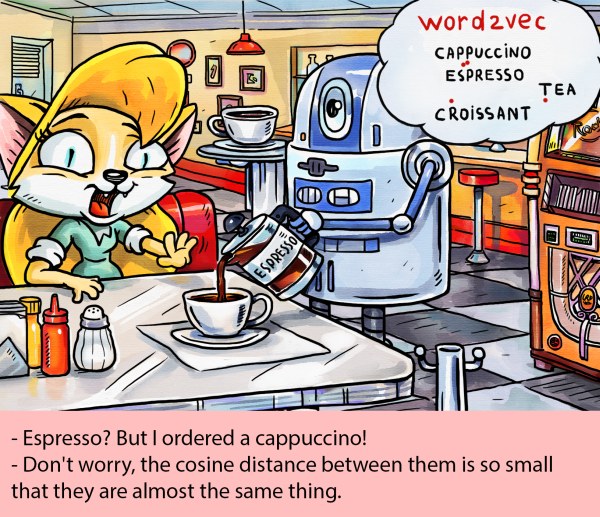

In machine learning and data science, cosine similarity has long been a go-to metric for measuring the semantic similarity between high-dimensional objects. However, a new study by researchers at Netflix and Cornell University challenges our understanding of this popular technique, identifying underlying issues that can lead to arbitrary and therefore meaningless results. This is particularly concerning for recommendation systems and other AI models that rely on cosine similarity to quantify how closely two items are related. For Netflix, understanding these limitations is essential for refining its algorithms to deliver more accurate and reliable results, and ultimately for improving user engagement and satisfaction.

Image source: AI Tech Trend (modified from the original)

Cosine similarity's popularity stems from the belief that it captures the directional alignment between embedding vectors, supposedly providing a more meaningful measure of similarity than a simple dot product. However, the research team, led by Harald Steck, Chaitanya Ekanadham, and Nathan Kallus, has uncovered a significant issue: in certain scenarios, cosine similarity can yield arbitrary results, potentially rendering the metric unreliable and opaque. The study highlights the need for developers and the AI community to scrutinize widely accepted tools and techniques, particularly those underpinning recommendation systems, LLMs, and vector stores.
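To make the concern concrete, here is a minimal sketch of one way such arbitrariness can arise. It is an illustration only, not code from the study. It assumes a model whose predictions depend only on user-item dot products, and the vectors and scaling factors are made-up values. Rescaling each embedding dimension (and inversely rescaling the user vector) leaves every user-item dot product unchanged, yet the cosine similarity between the two items shifts substantially.

```python
import math

def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def cosine_similarity(u, v):
    """Dot product divided by the product of the vectors' Euclidean norms."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))

def rescale(v, factors):
    """Multiply each dimension of v by the matching factor."""
    return [a * f for a, f in zip(v, factors)]

# Hypothetical 2-D embeddings (illustrative values, not from the study).
user = [1.0, 2.0]
item_a = [2.0, 1.0]
item_b = [1.0, 3.0]

# Arbitrary per-dimension scaling: items are multiplied by the factors,
# the user vector by their inverses, so user-item dot products cancel out.
factors = [8.0, 0.125]
inverse = [1.0 / f for f in factors]

user_scaled = rescale(user, inverse)
item_a_scaled = rescale(item_a, factors)
item_b_scaled = rescale(item_b, factors)

# The model's predictions (user-item dot products) are identical...
print(dot(user, item_a), dot(user_scaled, item_a_scaled))
print(dot(user, item_b), dot(user_scaled, item_b_scaled))

# ...but the item-item cosine similarity depends on the arbitrary scaling.
print(cosine_similarity(item_a, item_b))
print(cosine_similarity(item_a_scaled, item_b_scaled))
```

Both sets of embeddings describe the same predictions, so nothing in the data favors one over the other, yet they disagree on how similar the two items are.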
