
Energy-efficient AI model could be a game changer, 50 times better efficiency with no performance hit

AI up to this point has largely been a race to be first, with little consideration for metrics like efficiency. Looking to change that, the researchers trimmed...

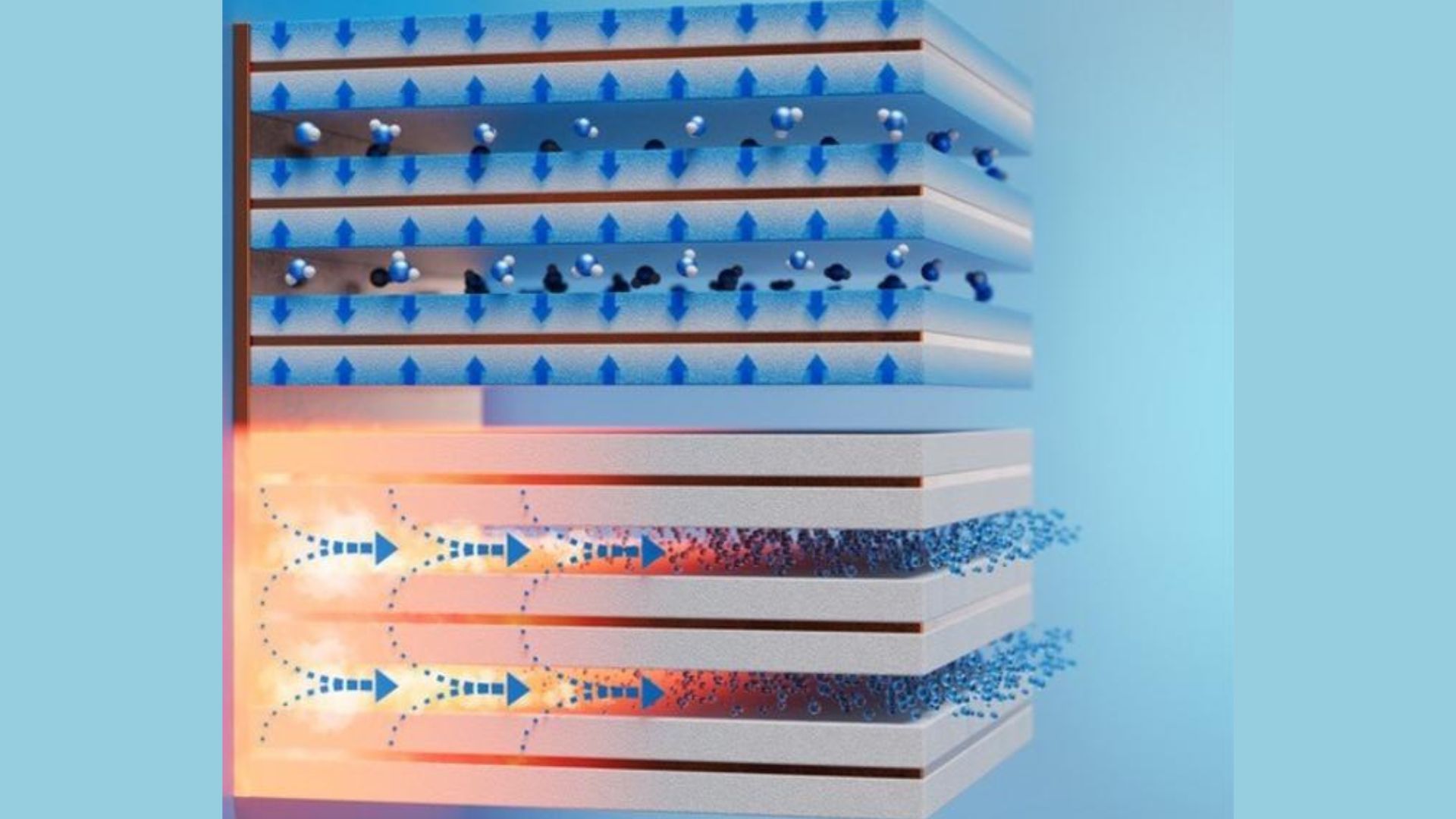

Cutting corners: Researchers from the University of California, Santa Cruz, have devised a way to run a billion-parameter-scale large language model using just 13 watts of power, about as much as a modern LED light bulb. The key change, inspired by a paper from Microsoft, is to eliminate matrix multiplication, so that the model's core computations involve summing rather than multiplying, an approach that is far less hardware-intensive. With efficiency gains of this sort in play, and given a full data center's worth of power, AI could soon take another huge leap forward.
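To make the "summing rather than multiplying" idea concrete, here is a minimal Python sketch. It assumes, purely for illustration, that every weight is restricted to -1, 0 or +1 (so-called ternary weights). With weights like that, each term of a matrix-vector product is either the input value, its negation, or nothing, so the result can be built from additions and subtractions alone. The function names `ternary_matvec` and `dense_matvec` are hypothetical, and this is not the researchers' actual code.

```python
from typing import List


def ternary_matvec(weights: List[List[int]], x: List[float]) -> List[float]:
    """Matrix-vector product for weights restricted to -1, 0 or +1.

    Each output is accumulated by adding or subtracting input values;
    no multiplication is performed.
    """
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi      # +1 weight: add the input
            elif w == -1:
                acc -= xi      # -1 weight: subtract the input
            elif w != 0:       # 0 weight: skip the input entirely
                raise ValueError("weights must be -1, 0 or +1")
        out.append(acc)
    return out


def dense_matvec(weights: List[List[int]], x: List[float]) -> List[float]:
    """Conventional multiply-and-add product, used here only for comparison."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, -1.5]

print(ternary_matvec(W, x))  # prints [2.0, 0.0]
print(dense_matvec(W, x))    # prints [2.0, 0.0]
```

Both functions return the same result. The addition-only version simply avoids the multiplier hardware that a conventional product relies on, which is where the hardware savings described above come from.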
