
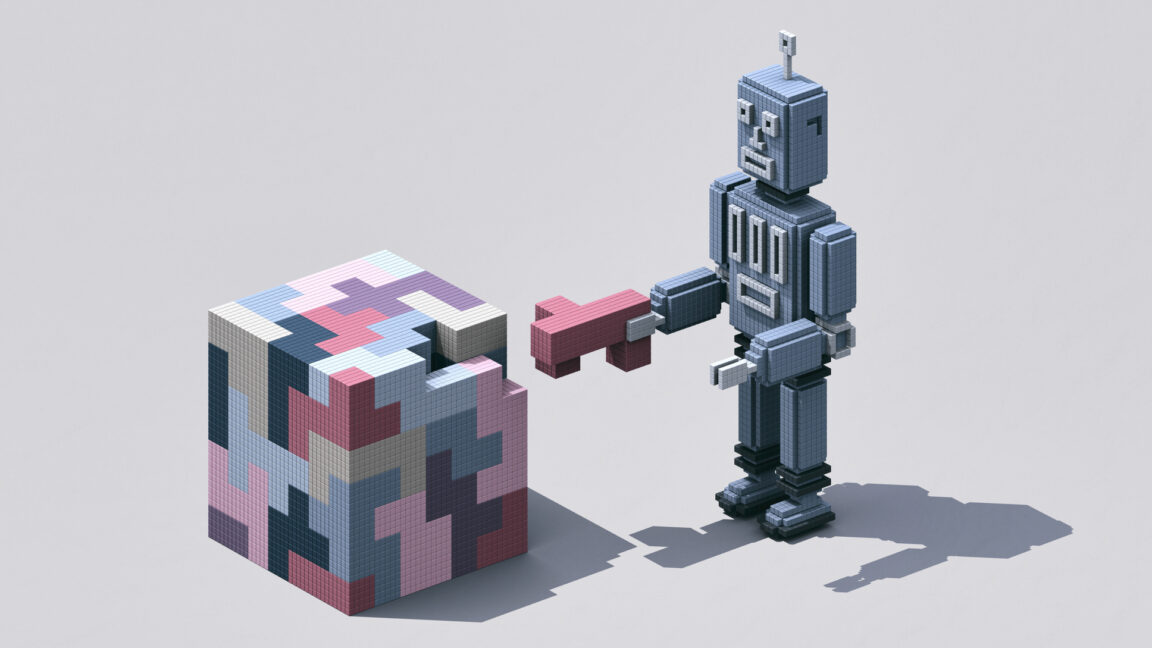

Evaluating LLMs playing text adventures

When we first set up the llm so that it could play text adventures, we noted that none of the models we tried with it were any good at it. We dreamed of a way to compare them, but all I could think of was setting a goal far into the game and seeing how long each model takes to get there.

It’s normal even for a skilled human player to immerse themselves in their surroundings rather than make constant progress. What we do need to be careful about is making sure the number of achievements in each branch is roughly the same; otherwise, models that happen to go down an achievement-rich path will get a higher score. Because of this, the score we get out of this test is a relative comparison between models, not an absolute measure of how well LLMs play text adventures.
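To make the branch-balance concern concrete, here is a minimal Python sketch. It assumes each achievement is tagged with the branch of the game it belongs to, and that a model's score is simply the number of known achievements it unlocks. The names (`ACHIEVEMENTS`, `branch_counts`, `is_balanced`, `score`), the example achievements, and the tolerance of one achievement are all hypothetical illustrations, not the setup used in this article.

```python
from collections import Counter

# Hypothetical achievement list: achievement name -> game branch it lives in.
ACHIEVEMENTS = {
    "open_mailbox": "house",
    "read_leaflet": "house",
    "enter_cellar": "underground",
    "light_lamp": "underground",
}


def branch_counts(achievements: dict[str, str]) -> Counter:
    """Count how many achievements each branch offers."""
    return Counter(achievements.values())


def is_balanced(achievements: dict[str, str], tolerance: int = 1) -> bool:
    """True if no branch offers more than `tolerance` extra achievements
    compared with the poorest branch (assumed fairness criterion)."""
    counts = branch_counts(achievements)
    if not counts:
        return True
    return max(counts.values()) - min(counts.values()) <= tolerance


def score(unlocked: set[str], achievements: dict[str, str]) -> int:
    """Assumed score: number of distinct known achievements a model unlocked.
    Unknown names in `unlocked` are ignored."""
    return len(unlocked.intersection(achievements))


if __name__ == "__main__":
    print(branch_counts(ACHIEVEMENTS))
    print(is_balanced(ACHIEVEMENTS))
    print(score({"open_mailbox", "light_lamp", "win_game"}, ACHIEVEMENTS))
```

With the sample data both branches offer two achievements, so `is_balanced` returns `True`. A model that unlocked `open_mailbox` and `light_lamp` (plus an unlisted `win_game`) scores 2. If one branch offered three achievements and another only one, `is_balanced` would flag it, which is the situation where a lucky path choice would inflate a model's score.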
