Extending the context length to 1M tokens

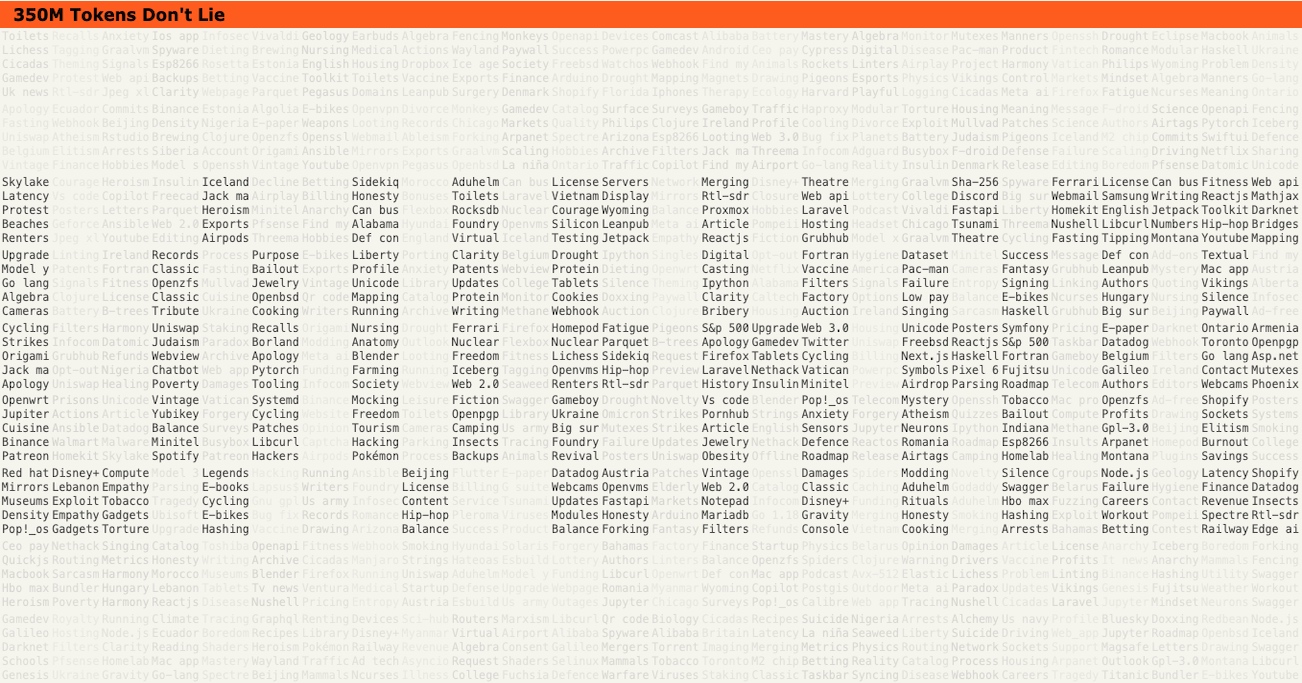

After the release of Qwen2.5, we heard the community’s demand for processing longer contexts. In recent months, we have made many optimizations to the model's capabilities and inference performance on extremely long contexts. Today, we are proud to introduce the new Qwen2.5-Turbo version, which features: Longer Context Support: We have extended the model’s context length from 128k to 1M tokens, which is approximately 1 million English words or 1.

(File: 2. minference.pdf) InfLLM: This paper presents a training-free memory-based approach to enable large language models to understand extremely long sequences by incorporating an efficient context memory mechanism.

(File: 5. needlebench.pdf) RULER: This paper proposes a synthetic benchmark for evaluating long-context language models with diverse task categories, including retrieval, multi-hop tracing, aggregation, and question answering.

However, we will actively explore further alignment of human preferences in long sequences, optimize inference efficiency to reduce computation time, and attempt to launch larger and stronger long-context models.
