Going beyond GPUs: The evolving landscape of AI chips and accelerators

AI accelerators and chips can help enterprises run select low- to medium-intensity AI workloads at a better total cost of ownership.

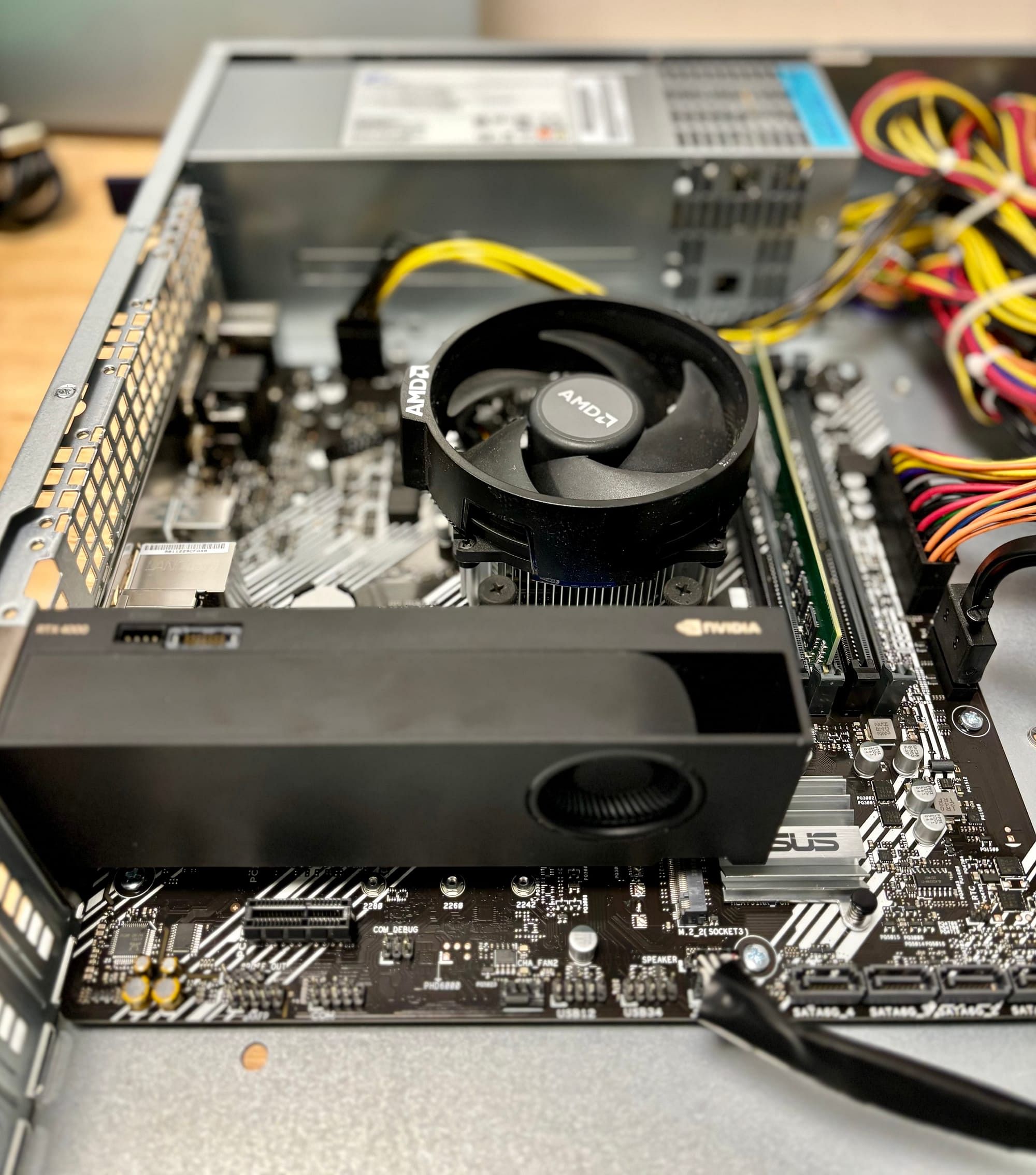

GPUs, with their parallel processing architecture, are well suited to accelerating all sorts of large-scale AI workloads, such as training and running massive, trillion-parameter language models or genome sequencing, but their total cost of ownership can be very high. IBM currently follows a hybrid cloud approach and uses multiple GPUs and AI accelerators across its stack, including offerings from Nvidia and Intel, to give enterprises choices that meet the needs of their unique workloads and applications with high performance and efficiency. In one case, Tractable, a company developing AI to analyze damage to property and vehicles for insurance claims, used Graphcore’s Intelligence Processing Unit-POD system (a specialized NPU offering) and saw significant performance gains compared with the GPUs it had been using.

Or read this on Venture Beat