How big are our embeddings now and why?

Embedding sizes and architectures have changed remarkably over the past 5 years

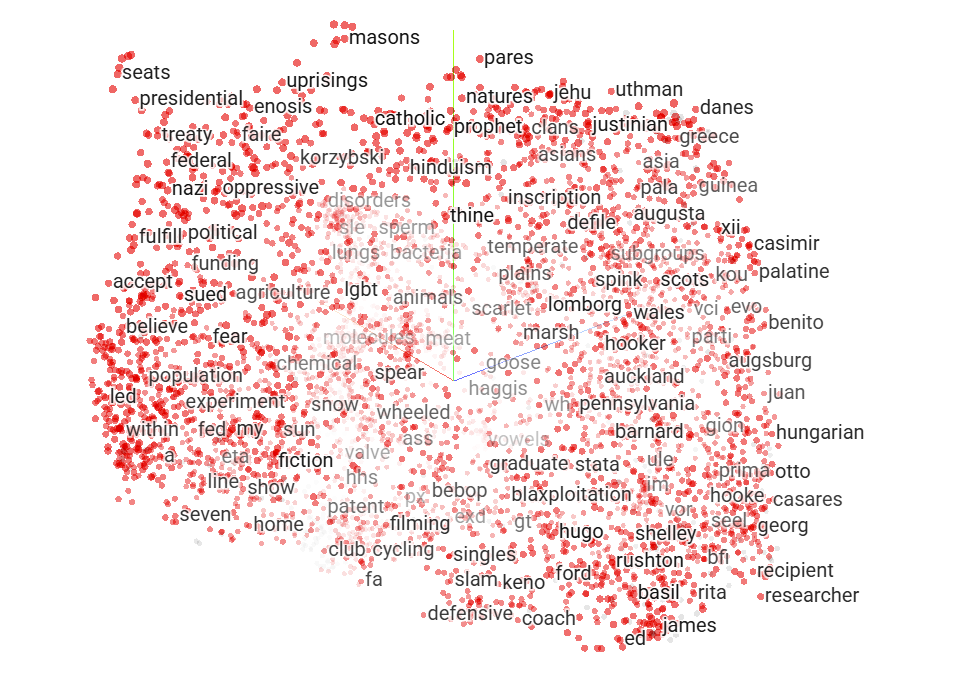

As a quick review, embeddings are compressed numerical representations of many kinds of input (text, images, audio) that we can use for machine learning tasks like search, recommendations, RAG, and classification. When people in industry talk about embeddings these days, they generally mean text embeddings, so how does this concept work with words? This is a fascinating area of study: we are only starting to understand how these latent representations work, through ideas like control vectors and related steering techniques, which Anthropic explored in the research behind the famous Golden Gate Claude demo.
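To make "numerical representations we can use for search" concrete, here is a minimal sketch. It assumes hand-made toy vectors rather than output from a real embedding model: each item is stored as a vector, and a search returns the items whose vectors point in the most similar direction, measured by cosine similarity. The vocabulary, the vector values, and the `nearest` helper are all hypothetical and chosen only for illustration.

```python
import math

# Toy, hand-made 4-dimensional "embeddings" (hypothetical values for illustration;
# real embedding models produce vectors with hundreds or thousands of dimensions).
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "kitten": [0.85, 0.75, 0.2, 0.05],
    "car": [0.1, 0.0, 0.9, 0.8],
    "truck": [0.05, 0.1, 0.85, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query, k=2):
    """Return the k items (excluding the query) most similar to the query."""
    q = EMBEDDINGS[query]
    scored = [
        (name, cosine_similarity(q, vec))
        for name, vec in EMBEDDINGS.items()
        if name != query
    ]
    # Highest similarity first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    for name, score in nearest("cat"):
        print(f"{name}: {score:.3f}")
```

Because "cat" and "kitten" were given vectors pointing in nearly the same direction, "kitten" ranks first for a "cat" query; production systems apply the same idea at much larger scale, usually with approximate nearest-neighbor indexes instead of a linear scan.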