Get the latest tech news

How We Optimize LLM Inference for AI Coding Assistant

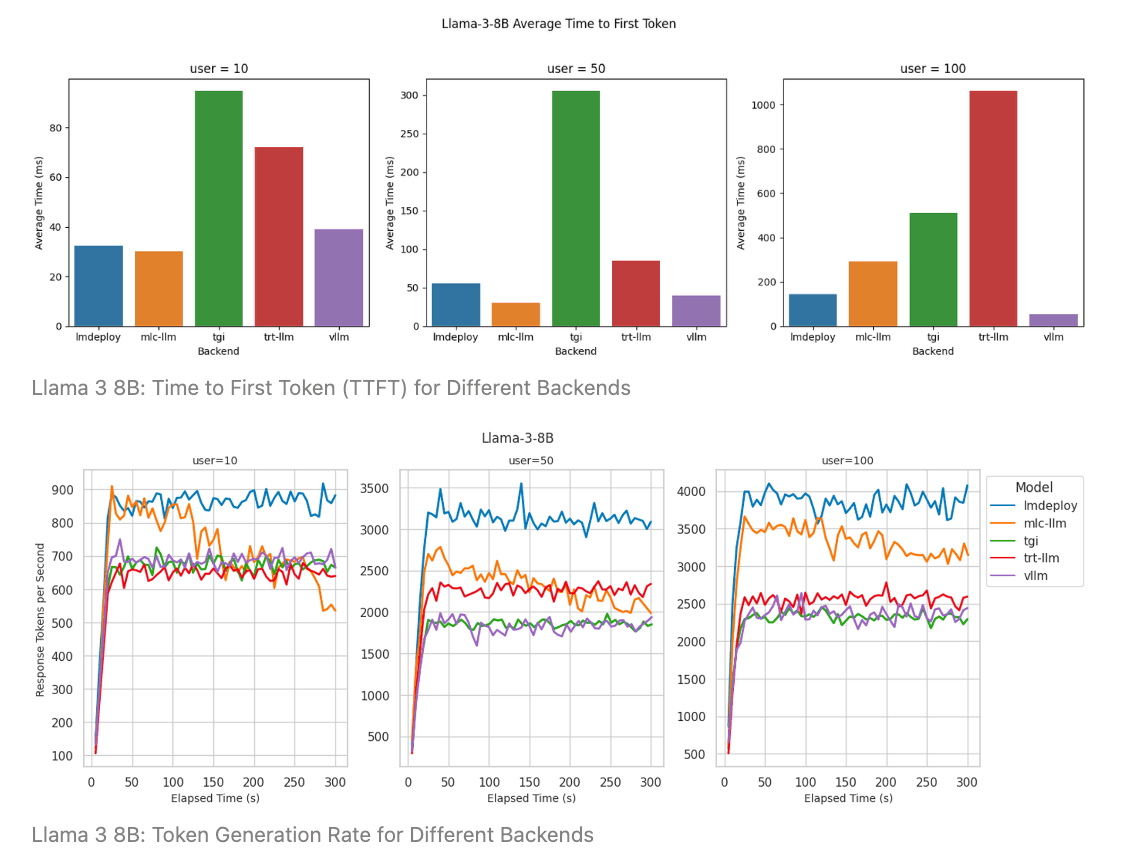

A technical blog post from Augment Code explaining their approach to optimizing LLM inference for code-focused AI applications. The post details how they achieved superior latency and throughput compared to existing solutions by prioritizing context processing speed over decoding, implementing token-level batching, and various technical optimizations. Key metrics include achieving <300ms time-to-first-token for 10k input tokens with Llama3 70B and maintaining >25% GPU FLOPS utilization. The post covers their technical architecture decisions, optimization process, and production system requirements.

Deployment sizes There are alternative batching strategies that split context processing and decoding into separate groups of GPUs and send the prefilled KV caches between them. Prior to Augment, at Google Research he was co-tech lead with Christian Szegedy, responsible for works like Memorizing Transformers and a precursor to Flash Attention. Carl Case is a research engineer who has spent the last decade working on improving deep learning systems by scaling training on accelerated hardware.

Or read this on Hacker News