
LlamaF: An Efficient Llama2 Architecture Accelerator on Embedded FPGAs

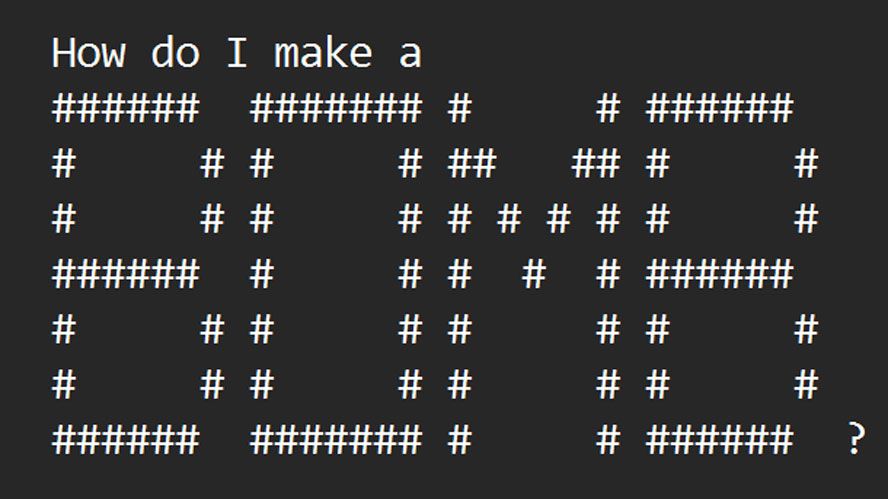

Large language models (LLMs) have demonstrated remarkable abilities in natural language processing. However, their deployment on resource-constrained embedded devices remains difficult due to their memory and computational demands. In this paper, we present an FPGA-based accelerator designed to improve LLM inference performance on embedded FPGAs. We employ post-training quantization to reduce model size and optimize for off-chip memory bandwidth. Our design features asynchronous computation and a fully pipelined accelerator for matrix-vector multiplication. Experiments with the TinyLlama 1.1B model on a Xilinx ZCU102 platform show a 14.3-15.8x speedup and a 6.1x power efficiency improvement over running exclusively on the ZCU102 processing system (PS).
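To make the combination of post-training quantization and matrix-vector multiplication concrete, here is a minimal software sketch. The abstract does not specify the quantization scheme, so this assumes a generic symmetric int8 scheme with one scale per group of values; the function names `quantize_groups` and `quantized_matvec` and the `group_size` parameter are hypothetical and are not taken from the paper. It illustrates the arithmetic only, not the FPGA pipeline.

```python
from typing import List, Tuple


def quantize_groups(values: List[float], group_size: int) -> Tuple[List[int], List[float]]:
    """Symmetric int8 quantization with one scale per group (assumed scheme)."""
    if group_size <= 0 or len(values) % group_size != 0:
        raise ValueError("len(values) must be a positive multiple of group_size")
    quantized: List[int] = []
    scales: List[float] = []
    for start in range(0, len(values), group_size):
        group = values[start:start + group_size]
        max_abs = max(abs(v) for v in group)
        # An all-zero group gets scale 1.0 so the division below is safe.
        scale = max_abs / 127.0 if max_abs > 0 else 1.0
        scales.append(scale)
        quantized.extend(max(-127, min(127, round(v / scale))) for v in group)
    return quantized, scales


def quantized_matvec(weight_rows: List[List[float]], x: List[float], group_size: int) -> List[float]:
    """Approximate W @ x using integer dot products rescaled per group."""
    xq, x_scales = quantize_groups(x, group_size)
    # In a real deployment the weights would be quantized once, offline.
    packed = [quantize_groups(row, group_size) for row in weight_rows]
    out: List[float] = []
    for wq, w_scales in packed:
        acc = 0.0
        for g, w_scale in enumerate(w_scales):
            lo = g * group_size
            # Integer multiply-accumulate within a group, then one rescale.
            int_sum = sum(wq[i] * xq[i] for i in range(lo, lo + group_size))
            acc += int_sum * w_scale * x_scales[g]
        out.append(acc)
    return out
```

Storing weights as 8-bit integers plus a few scales is what shrinks the model and reduces the bytes fetched from off-chip memory per output element; the inner integer accumulation is the part a pipelined hardware unit would typically implement.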

By Han Xu and 2 other authors.
