LLMs develop their own understanding of reality as their language abilities improve | In controlled experiments, MIT CSAIL researchers discover simulations of reality developing deep within LLMs, indicating an understanding of language beyond simple mimicry.

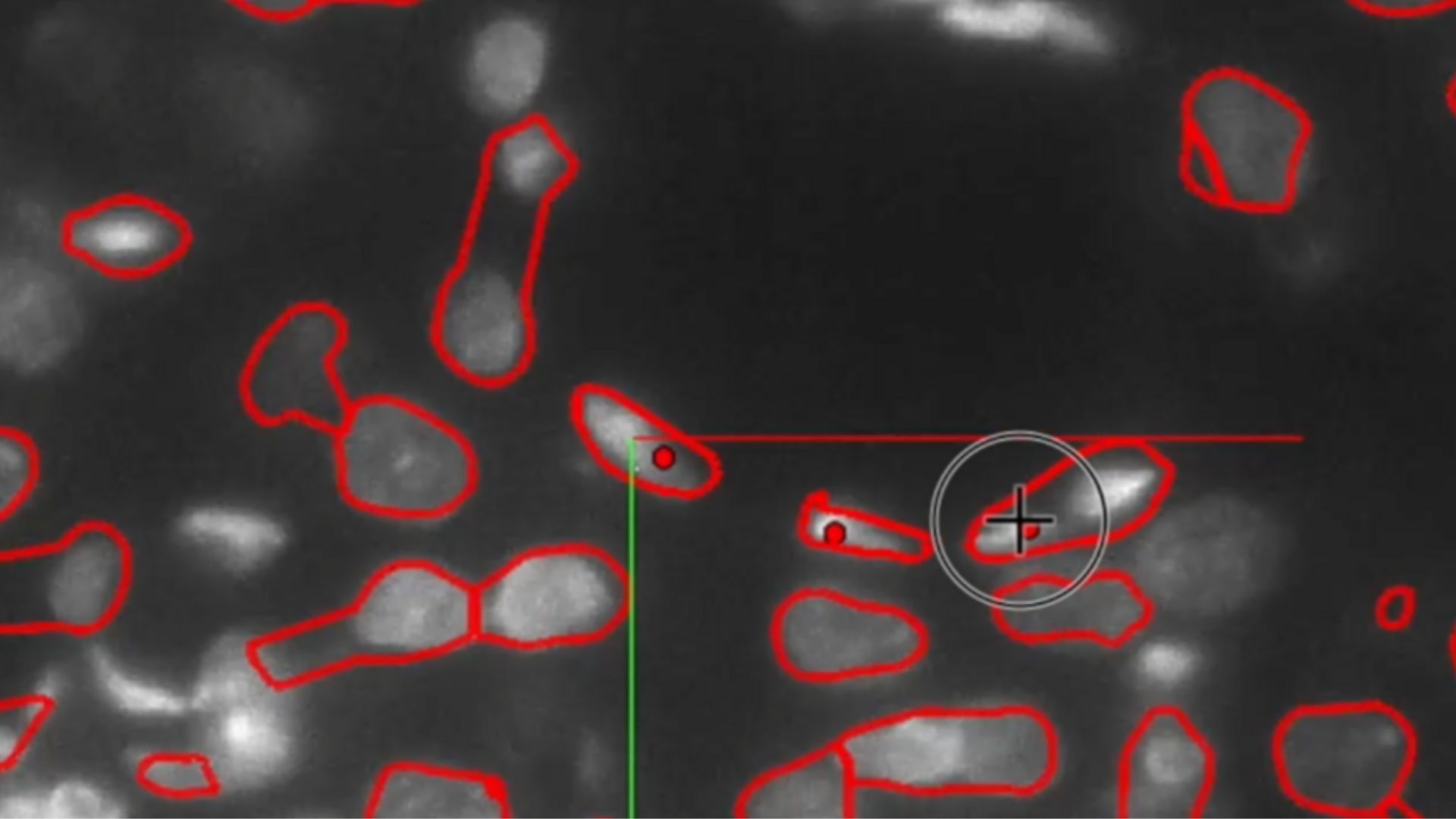

An MIT team used probing classifiers to investigate whether language models trained only on next-token prediction can capture the underlying meaning of programming languages. They found that the model forms a representation of program semantics in order to generate correct instructions.

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have uncovered intriguing results suggesting that language models may develop their own understanding of reality as a way to improve their generative abilities.

“By the time we completed training, our language model generated correct instructions at a rate of 92.4 percent,” says MIT electrical engineering and computer science (EECS) PhD student and CSAIL affiliate Charles Jin, who is the lead author of a new paper on the work.

“There is a lot of debate these days about whether LLMs are actually ‘understanding’ language or rather if their success can be attributed to what is essentially tricks and heuristics that come from slurping up large volumes of text,” says Ellie Pavlick, assistant professor of computer science and linguistics at Brown University, who was not involved in the paper.
