Nvidia releases a new small, open model Nemotron-Nano-9B-v2 with toggle on/off reasoning

Developers are free to create and distribute derivative models. Importantly, Nvidia does not claim ownership of any outputs generated...

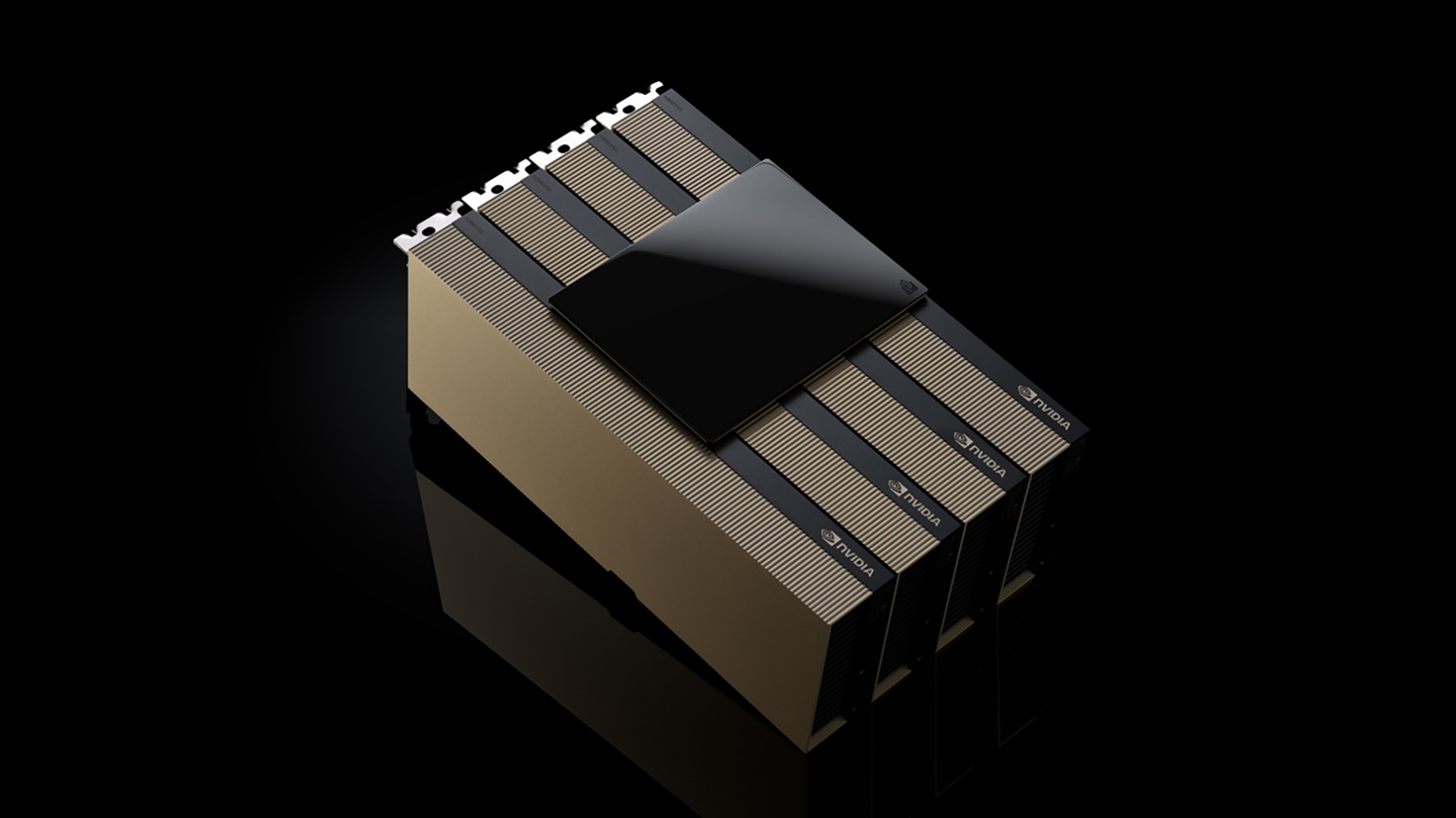

As Oleksii Kuchiaev, Nvidia Director of AI Model Post-Training, said on X in response to a question I submitted to him: “The 12B was pruned to 9B to specifically fit A10 which is a popular GPU choice for deployment.”

A hybrid Mamba-Transformer reduces the cost of attention on long sequences by substituting most of the attention layers with linear-time state space layers, achieving up to 2–3× higher throughput on long contexts with comparable accuracy.

For an enterprise developer, the licensing terms mean the model can be put into production immediately without negotiating a separate commercial license or paying fees tied to usage thresholds, revenue levels, or user counts.
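To make the "linear-time" point about state space layers concrete, here is a rough Python sketch. It is a toy illustration, not Nvidia's implementation: the function names, the scalar recurrence, and the `decay` and `gain` values are all assumptions chosen for readability. It contrasts the number of pairwise scores full self-attention computes with the single pass a simple state-space recurrence makes over the same sequence.

```python
def attention_pair_count(seq_len: int) -> int:
    """Query-key scores computed by full self-attention: one per token pair."""
    return seq_len * seq_len


def ssm_scan(inputs, decay=0.9, gain=0.1):
    """Toy scalar state-space recurrence (hypothetical parameters).

    Each step updates a fixed-size state: h_t = decay * h_{t-1} + gain * x_t.
    Returns the per-step outputs and the number of update steps performed.
    """
    state = 0.0
    outputs = []
    steps = 0
    for x in inputs:
        state = decay * state + gain * x  # constant work per token
        outputs.append(state)
        steps += 1
    return outputs, steps


if __name__ == "__main__":
    for n in (1_000, 10_000):
        _, steps = ssm_scan([1.0] * n)
        print(f"tokens={n}: attention scores={attention_pair_count(n)}, "
              f"recurrence steps={steps}")
```

Growing the sequence tenfold grows the recurrence's work tenfold but the attention score count a hundredfold, which is the general reason a hybrid design can help most on long contexts.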

Or read this on VentureBeat