Opera is testing letting you download LLMs for local use, a first for a major browser

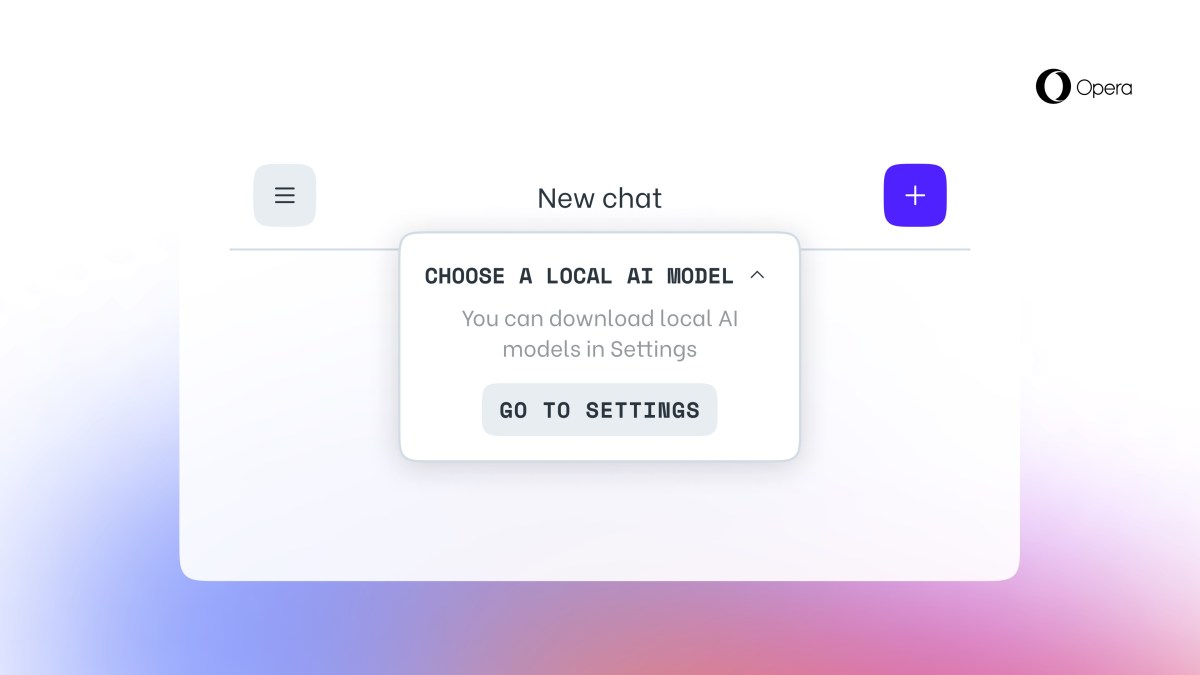

You can now run LLMs locally in Opera, without having to send information to a server.

According to an Opera press release, this is the first time a major browser has let users download LLMs for local use through a built-in feature. Opera warns that a local LLM can be a good bit slower than a server-based one, depending on your hardware's computing capabilities. Once a model has been downloaded, open the Aria Chat side panel and select "choose local mode" from the drop-down box at the top.
