
Show HN: An open-source implementation of AlphaFold3

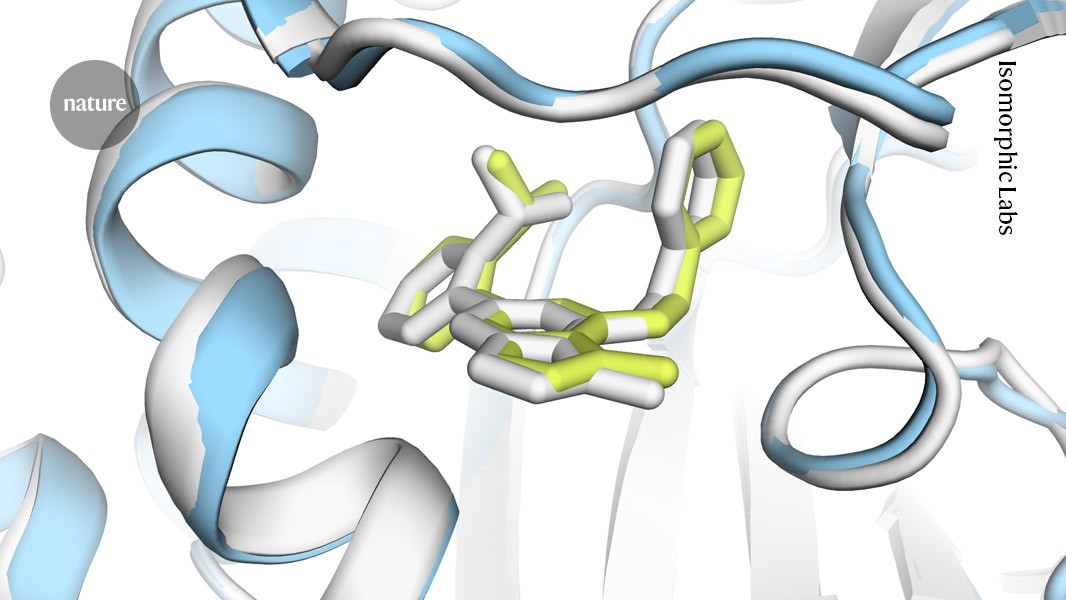

Open-source implementation of AlphaFold3, developed in the Ligo-Biosciences/AlphaFold3 repository on GitHub.

We think this is a simple typo in the Supplementary Information, caused by an addition being typed as a multiplication. In our implementation, we use the loss scaling factor consistent with Karras et al. (2022).

We experimented with both variants and found that, within the range of steps we trained our models on, the DiT block with residual connections gives much faster convergence and better gradient flow through the network.

For the AtomAttentionEncoder and AtomAttentionDecoder modules, we experimented with a custom PyTorch-native implementation to reduce the memory footprint from quadratic to linear, but the benefits were not significant compared to naively repurposing the AttentionPairBias kernel.
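To make the loss scaling remark concrete, here is a minimal sketch of the per-noise-level loss weight from Karras et al. (2022), which has the form (sigma^2 + sigma_data^2) / (sigma * sigma_data)^2. The function names are hypothetical and not taken from the repository, and the value `sigma_data = 16.0` in the usage lines is only an illustrative assumption. The second function uses a sum in the denominator and is included purely for contrast, on the assumption that this is the kind of addition-versus-multiplication discrepancy the text refers to.

```python
def edm_loss_weight(sigma: float, sigma_data: float) -> float:
    """Loss weight in the form used by Karras et al. (2022):
    (sigma^2 + sigma_data^2) / (sigma * sigma_data)^2.
    """
    if sigma <= 0 or sigma_data <= 0:
        raise ValueError("sigma and sigma_data must be positive")
    return (sigma**2 + sigma_data**2) / (sigma * sigma_data) ** 2


def additive_denominator_weight(sigma: float, sigma_data: float) -> float:
    """Contrast case (assumption): the same expression with a sum,
    rather than a product, in the denominator.
    """
    if sigma <= 0 or sigma_data <= 0:
        raise ValueError("sigma and sigma_data must be positive")
    return (sigma**2 + sigma_data**2) / (sigma + sigma_data) ** 2


if __name__ == "__main__":
    sigma_data = 16.0  # illustrative value, an assumption for this sketch
    for sigma in (0.1, 1.0, 16.0, 100.0):
        product_form = edm_loss_weight(sigma, sigma_data)
        sum_form = additive_denominator_weight(sigma, sigma_data)
        print(f"sigma={sigma:g}  product form={product_form:.6g}  sum form={sum_form:.6g}")
```

The two forms behave very differently at low noise: the product form grows without bound as sigma approaches zero, up-weighting low-noise samples, while the sum form stays bounded between 0.5 and 1.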
