The Curious Similarity Between LLMs and Quantum Mechanics

Follow me on X at @robleclerc

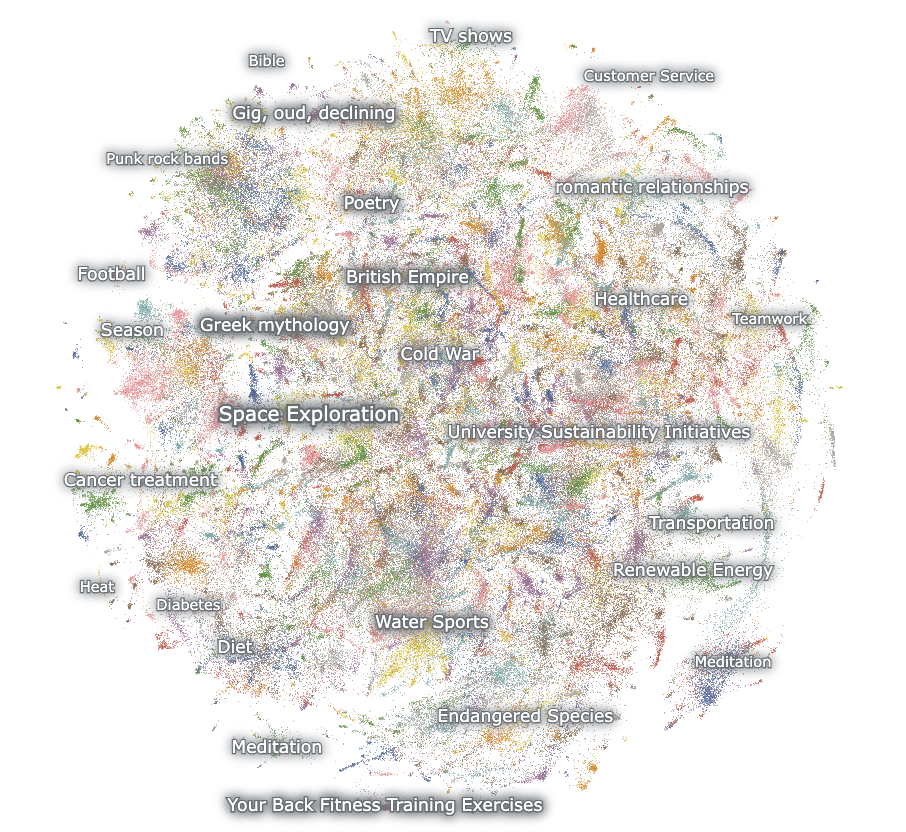

This got me thinking: are transformers proving so powerful because they’ve inadvertently captured key design principles of nature, and is that what gives them their open-endedness? Self-attention heads bind words across sentences much like quantum entanglement: “he” in one paragraph instantly locks onto “Bob” in another, no matter the distance. Even embedding vectors, those high-dimensional containers of meaning, behave like probability waves that collapse into definite interpretations.
