
When are SSDs slow?

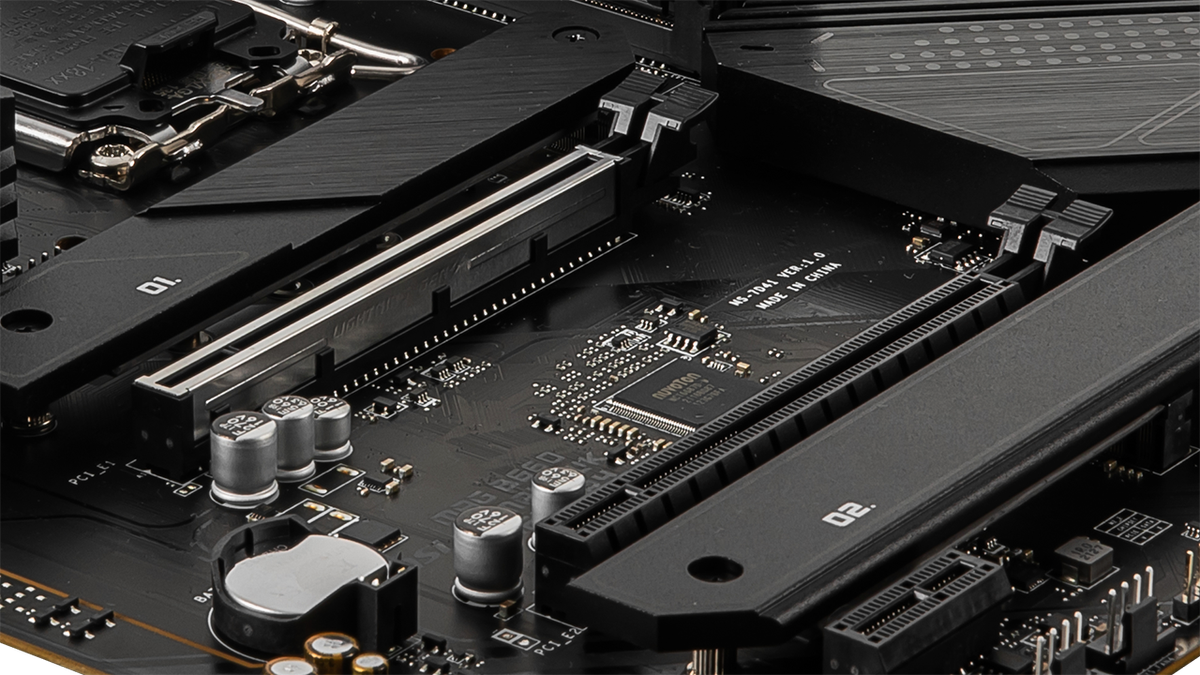

Why Your SSD (Probably) Sucks and What Your Database Can Do About It

Database system developers have a complicated relationship with storage devices: They can store terabytes of data cheaply, and everything is still there after a system crash. On the other hand, storage can be a spoilsport by being slow when it matters most. This blog post shows how SSDs are used in database systems, where SSDs have limitations, and how to get around them.

If not, CedarDB will fall back to asynchronous commits, assuming it is running in a non-production environment where the small chance of data loss during a system failure is acceptable. If you cannot tolerate losing up to 100 milliseconds of data, you can optionally force an early flush of the journal when issuing a write, at the cost of higher commit latency. PostgreSQL also offers a commit_delay configuration parameter, which waits a configured number of microseconds before initiating a log flush so that concurrent transactions can share it. This acts as a simple group commit mechanism with a fixed rather than variable time window.
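To make the group commit idea concrete, here is a minimal sketch in Python. It is not how CedarDB or PostgreSQL implement it: the class `GroupCommitLog`, the `max_batch` parameter, and the `run_demo` helper are hypothetical names invented for illustration, and the batch is closed by size rather than by a time window to keep the example deterministic. The point is only that several commits can share one expensive `fsync` call.

```python
import os
import tempfile


class GroupCommitLog:
    """Toy write-ahead log that lets several commits share one fsync.

    Assumption: batches are closed by size (max_batch). Real systems
    typically also close a batch after a time window has elapsed.
    """

    def __init__(self, path, max_batch=4):
        self.fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o600)
        self.max_batch = max_batch
        self.pending = []      # records queued but not yet durable
        self.fsync_calls = 0   # counts how many flushes hit the device

    def commit(self, record: bytes):
        """Queue a record and flush once the batch is full."""
        self.pending.append(record)
        if len(self.pending) >= self.max_batch:
            self.flush()

    def flush(self):
        """Write all queued records and make them durable with one fsync."""
        if not self.pending:
            return
        os.write(self.fd, b"".join(r + b"\n" for r in self.pending))
        os.fsync(self.fd)
        self.fsync_calls += 1
        self.pending.clear()

    def close(self):
        """Flush whatever is still queued, then release the file."""
        self.flush()
        os.close(self.fd)


def run_demo(n_commits=10, max_batch=4):
    """Return the number of fsync calls needed for n_commits commits."""
    with tempfile.TemporaryDirectory() as d:
        log = GroupCommitLog(os.path.join(d, "wal.log"), max_batch)
        for i in range(n_commits):
            log.commit(f"txn {i}".encode())
        log.close()
        return log.fsync_calls
```

With `max_batch=1`, every commit pays for its own flush; a larger batch trades a little commit latency for fewer flushes. Records still sitting in `pending` are exactly the data that would be lost in a crash, which is the risk the asynchronous commit mode described above accepts.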
