
Why I find diffusion models interesting

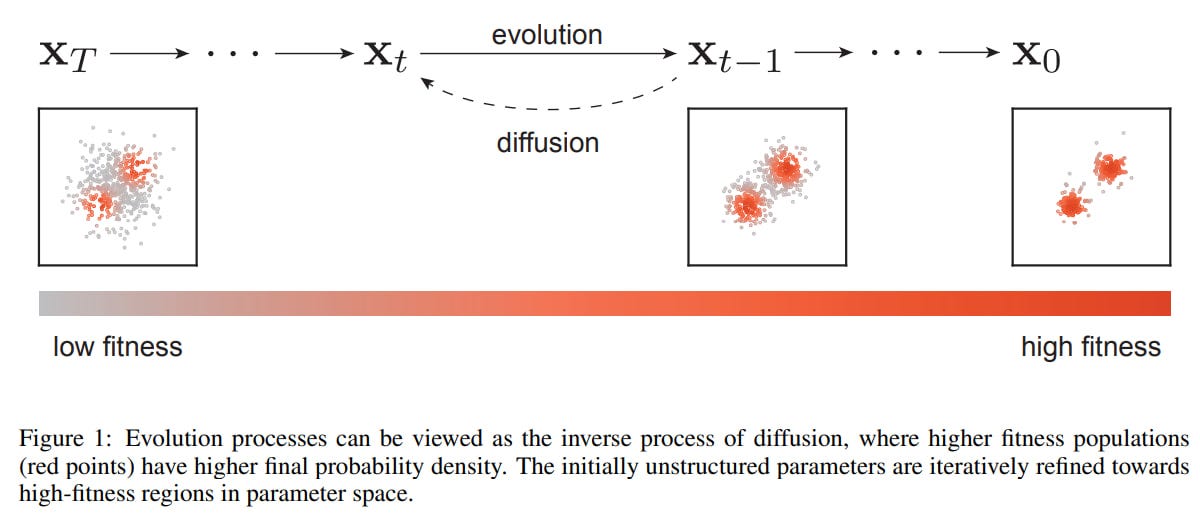

I stumbled across a tweet a week or so ago announcing that a company called Inception Labs had released a Diffusion LLM (dLLM). Instead of being autoregressive and predicting tokens one at a time from left to right, a dLLM starts with the entire output at once and gradually refines it into sensible words at every position (beginning, middle, and end) simultaneously. An approach that has historically worked for image and video models is now outperforming similar-sized LLMs at code generation.
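To make the "all positions at once" idea concrete, here is a toy sketch of masked-diffusion-style decoding. It is not Inception Labs' method, whose details I don't know. `toy_denoiser` is a hypothetical oracle that simply peeks at a fixed target and assigns random confidences, standing in for a trained model. What the sketch illustrates is only the decoding schedule: every step proposes tokens for all masked positions, and the most confident ones are committed wherever they sit in the sequence. The names `diffusion_decode` and `autoregressive_decode` are likewise made up for illustration.

```python
import random
from typing import Dict, List, Optional, Tuple

MASK: Optional[str] = None  # placeholder for a position that is not decided yet


def toy_denoiser(
    seq: List[Optional[str]], target: List[str], rng: random.Random
) -> Dict[int, Tuple[str, float]]:
    """Hypothetical stand-in for a trained dLLM.

    For every masked position, propose a token plus a confidence score.
    A real model would predict from context; this oracle reads the target
    and uses random confidences, so only the decoding order is meaningful.
    """
    return {i: (target[i], rng.random()) for i, tok in enumerate(seq) if tok is MASK}


def diffusion_decode(
    target: List[str], steps: int, seed: int = 0
) -> Tuple[List[Optional[str]], List[List[Optional[str]]]]:
    """Start fully masked and commit the most confident proposals each step."""
    if steps < 1:
        raise ValueError("steps must be >= 1")
    rng = random.Random(seed)
    seq: List[Optional[str]] = [MASK] * len(target)
    per_step = -(-len(target) // steps)  # ceil(len / steps) positions per step
    history: List[List[Optional[str]]] = []
    while any(tok is MASK for tok in seq):
        proposals = toy_denoiser(seq, target, rng)
        # Rank all masked positions by confidence, regardless of their index.
        ranked = sorted(proposals.items(), key=lambda kv: kv[1][1], reverse=True)
        for i, (tok, _conf) in ranked[:per_step]:
            seq[i] = tok
        history.append(list(seq))
    return seq, history


def autoregressive_decode(
    target: List[str],
) -> Tuple[List[Optional[str]], List[List[Optional[str]]]]:
    """Baseline: exactly one token per step, strictly left to right."""
    seq: List[Optional[str]] = [MASK] * len(target)
    history: List[List[Optional[str]]] = []
    for i in range(len(target)):
        seq[i] = target[i]
        history.append(list(seq))
    return seq, history


if __name__ == "__main__":
    target = "def add ( a , b ) : return a + b".split()
    _, ar_history = autoregressive_decode(target)
    _, dm_history = diffusion_decode(target, steps=4)
    print(f"autoregressive: {len(ar_history)} steps, diffusion-style: {len(dm_history)} steps")
    for step, seq in enumerate(dm_history, 1):
        print(step, " ".join("_" if tok is MASK else tok for tok in seq))
```

For the 12-token snippet above, the first printed line reports 12 autoregressive steps versus 4 diffusion-style steps, and the per-step printout shows tokens appearing out of order across the whole line. The step count is a knob here: fewer steps means more tokens committed in parallel per step, which is where the speed argument for dLLMs comes from, at the cost of each step committing more tokens before the rest of the context is known.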

After spending the better part of the last two years reading, writing, and working on LLM evaluation, I see some obvious first-hand benefits of this paradigm: planning, reasoning, and self-correction are crucial parts of agent flows, and we may currently be bottlenecked by the autoregressive LLM architecture.
